Deployment

Install dependencies

Base dependencies

JDK 1.8 +

Maven 3.3.6

Git

Python

Python 2.6 or 2.7 is required.

cd /home/hadoop/software

wget https://www.python.org/ftp/python/2.7.6/Python-2.7.6.tgz

tar -zxvf /home/hadoop/software/Python-2.7.6.tgz -C /home/hadoop/app

cd /home/hadoop/app/Python-2.7.6/

./configure --prefix=/home/hadoop/app/Python-2.7.6/

make

make install

# Add Python to the environment variables

vi ~/.bash_profile

export PYTHON_HOME=/home/hadoop/app/Python-2.7.6

export PATH=$PYTHON_HOME/bin:$PATH
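
Once the profile has been reloaded (for example with `source ~/.bash_profile`), it may be worth confirming that the `python` found on `PATH` really is a 2.6 or 2.7 build. The following is a minimal sketch; the function names `is_supported_python` and `check_python` are illustrative and not part of HUE or Python.

```shell
# Return 0 when a "major.minor[.patch]" version string is 2.6.x or 2.7.x.
is_supported_python() {
  case "$1" in
    2.6|2.6.*|2.7|2.7.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Check the interpreter that is currently first on PATH.
check_python() {
  local version
  if ! command -v python >/dev/null 2>&1; then
    echo "python not found on PATH" >&2
    return 1
  fi
  # Python 2 prints its version to stderr, hence the 2>&1.
  version=$(python -V 2>&1 | awk '{print $2}')
  is_supported_python "$version"
}

# Example:
# check_python && echo "Python version is suitable for HUE"
```

If `check_python` fails even though the build succeeded, the `PATH` export above is probably not active in the current shell.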

pip

Install pip

mkdir ~/app/pip

cd ~/app/pip

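# Python 2.7 needs the version-specific bootstrap script; the default get-pip.py no longer supports Python 2

curl https://bootstrap.pypa.io/pip/2.7/get-pip.py -o get-pip.py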

python get-pip.py

Install the required Python packages with pip

pip install ipython

pip install ipdb

pip install werkzeug

pip install windmill

pip install logilab-astng

Other dependencies

sudo yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql mysql-devel openldap-devel python-devel sqlite-devel gmp-devel
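
After the `yum install` above, a quick check can show whether any package was skipped, for instance because a repository was unavailable. This is only a sketch: `missing_packages` is a hypothetical helper, and it assumes an RPM-based system where `rpm -q` is available.

```shell
# Print each package name from the arguments that `rpm -q` does not report as installed.
missing_packages() {
  local pkg
  for pkg in "$@"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

# Example with a few of the packages from the yum command above:
# missing_packages gcc gcc-c++ libffi-devel libxml2-devel libxslt-devel mysql-devel
```

An empty result means every listed package is installed; any name printed can be passed back to `sudo yum install`.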

Preparing HUE

Download HUE:

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.7.0.tar.gz

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.7.0-src.tar.gz

Upload the archive to the shared source directory: /home/hadoop/source

Extract

tar -xzvf /home/hadoop/source/hue-3.9.0-cdh5.7.0.tar.gz -C /home/hadoop/app

Build commands

cd /home/hadoop/app/hue-3.9.0-cdh5.7.0

make apps
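
The later steps in this guide call `build/env/bin/hue` and `build/env/bin/supervisor`, so one way to confirm that `make apps` finished is to check that both files exist and are executable. A minimal sketch, where `hue_is_built` is an illustrative name and the HUE directory is assumed to be `/home/hadoop/app/hue-3.9.0-cdh5.7.0`:

```shell
# Return 0 when the HUE directory ($1) contains the executables used later in this guide.
hue_is_built() {
  [ -x "$1/build/env/bin/hue" ] && [ -x "$1/build/env/bin/supervisor" ]
}

# Example:
# hue_is_built /home/hadoop/app/hue-3.9.0-cdh5.7.0 && echo "HUE build looks complete"
```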

Integration

Hadoop

# HDFS

vi /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop000:50070</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

# Proxy user for HUE (core-site.xml)

vi /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop/core-site.xml

<property>

<name>hadoop.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hue.groups</name>

<value>*</value>

</property>
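
With WebHDFS enabled and the `hue` proxy user configured, the settings can be exercised directly against the WebHDFS REST API once HDFS has been restarted. The sketch below only builds the request URL; `webhdfs_url` is a hypothetical helper, and the host, port, and user names are taken from (or assumed to match) the values used in this guide. The `doas` parameter asks WebHDFS to run the request as another user, which is what the proxy user settings permit.

```shell
# Build a WebHDFS URL from: host, port, HDFS path, operation, authenticated user, impersonated user.
webhdfs_url() {
  printf 'http://%s:%s/webhdfs/v1%s?op=%s&user.name=%s&doas=%s\n' \
    "$1" "$2" "$3" "$4" "$5" "$6"
}

# Example: list the HDFS root as user "hue" acting on behalf of "hadoop".
# curl -s "$(webhdfs_url hadoop000 50070 / LISTSTATUS hue hadoop)"
```

A JSON `FileStatuses` response suggests both properties are in effect; an authorization error for the `hue` user usually points at the proxy user entries.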

Hive

# Copy the Hive configuration file

cp /home/hadoop/app/hive-1.1.0-cdh5.7.0/conf/hive-site.xml /home/hadoop/app/hue-3.9.0-cdh5.7.0/desktop/conf

Edit the configuration file

vi /home/hadoop/app/hue-3.9.0-cdh5.7.0/desktop/conf/hue.ini

secret_key=8732A93E3166430080A4952F669C0332

http_host=hadoop000

http_port=8000

time_zone=Asia/Shanghai

default_hdfs_superuser=hadoop

[hadoop]

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs=hdfs://hadoop000:9000

webhdfs_url=http://hadoop000:50070/webhdfs/v1

hadoop_conf_dir='/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop'

[[yarn_clusters]]

[[[default]]]

resourcemanager_host=hadoop000

resourcemanager_port=8032

submit_to=True

resourcemanager_api_url=http://hadoop000:8088

proxy_api_url=http://hadoop000:8088

history_server_api_url=http://hadoop000:19888

# Use MySQL as HUE's backing database

[[database]]

engine=mysql

host=hadoop000

port=3306

user=root

password=12abAB

name=hue_desktop

## options={}

# RDBMS support (in a stock hue.ini this block sits under [librdbms] and [[databases]])

[[[mysql]]]

nice_name="MySql-MetaStore"

name=hive_basic

engine=mysql

host=hadoop000

port=3306

user=root

password=12abAB

# Spark support

[spark]

# Host address of the Livy Server.

livy_server_host=hadoop000

# Port of the Livy Server.

livy_server_port=8998

# Configure livy to start in local 'process' mode, or 'yarn' workers.

livy_server_session_kind=yarn

# If livy should use proxy users when submitting a job.

livy_impersonation_enabled=true

# Host of the Sql Server

sql_server_host=hadoop000

# Port of the Sql Server

sql_server_port=10000
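
The `secret_key` shown in the hue.ini settings above is a 32-character hex string, and each installation should use its own random value rather than copying one from a guide. A minimal sketch for generating a value of the same shape; `generate_secret_key` is an illustrative name, and reading `/dev/urandom` is an assumption about the host system.

```shell
# Print 16 random bytes as 32 uppercase hexadecimal characters.
generate_secret_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n' | tr 'a-f' 'A-F'
}

# Example: print a line that can be pasted into hue.ini.
# echo "secret_key=$(generate_secret_key)"
```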

Initialize the MySQL database

# The hue_desktop database (the name configured above for HUE's MySQL backing database) must be created in MySQL beforehand

/home/hadoop/app/hue-3.9.0-cdh5.7.0/build/env/bin/hue syncdb

/home/hadoop/app/hue-3.9.0-cdh5.7.0/build/env/bin/hue migrate
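
Before the two commands above can succeed, the `hue_desktop` database has to exist. One possible way to create it from the shell is sketched below; `create_db_sql` is a hypothetical helper, and the `utf8` character set is an assumption rather than something this guide specifies.

```shell
# Print a CREATE DATABASE statement for the database name given as $1.
create_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS `%s` DEFAULT CHARACTER SET utf8;\n' "$1"
}

# Example, using the host, port, and user from the hue.ini settings (prompts for the password):
# mysql -h hadoop000 -P 3306 -u root -p -e "$(create_db_sql hue_desktop)"
```

Because the statement uses `IF NOT EXISTS`, running it again on an existing database is harmless.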

Startup

# HUE requires these services to be started first: 1. Hadoop 2. HiveServer2

# Start HUE

/home/hadoop/app/hue-3.9.0-cdh5.7.0/build/env/bin/supervisor
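
Since HUE depends on Hadoop and HiveServer2 already running, a small pre-flight check before launching the supervisor may save some debugging. The sketch below assumes bash (for its `/dev/tcp` pseudo-device) and the coreutils `timeout` command; the function names are illustrative, and the ports are the ones used in this guide (50070 for the NameNode web address, 10000 for HiveServer2).

```shell
# Succeed when a TCP connection to host ($1) and port ($2) opens within 2 seconds.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report each host:port argument as up or down; return non-zero if any is down.
check_prerequisites() {
  local target host port failed=0
  for target in "$@"; do
    host=${target%%:*}
    port=${target##*:}
    if port_open "$host" "$port"; then
      echo "up:   $target"
    else
      echo "down: $target"
      failed=1
    fi
  done
  return "$failed"
}

# Example:
# check_prerequisites hadoop000:50070 hadoop000:10000 && echo "ready to start HUE"
```

The same function can be pointed at `hadoop000:8000` afterwards to see whether the HUE web server itself came up.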

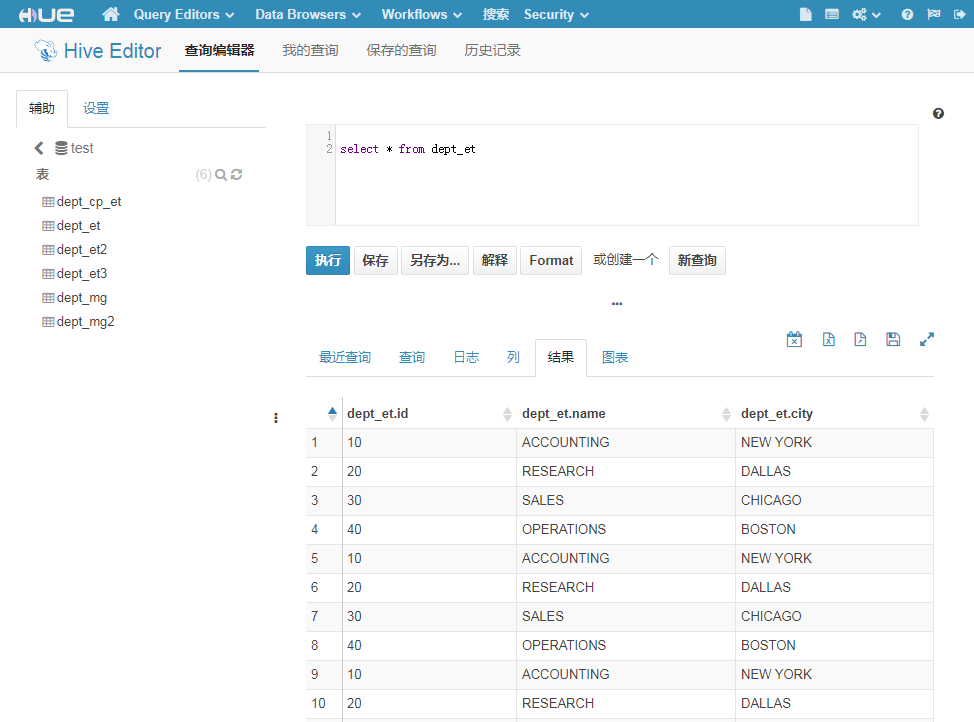

Testing